Designing Haptics for Multichannel Device | Project Esther

In the latest rendition of CES, Razer showcased Project Esther: the world’s first HD Haptics Gaming Cushion. Interhaptics is a software company specializing

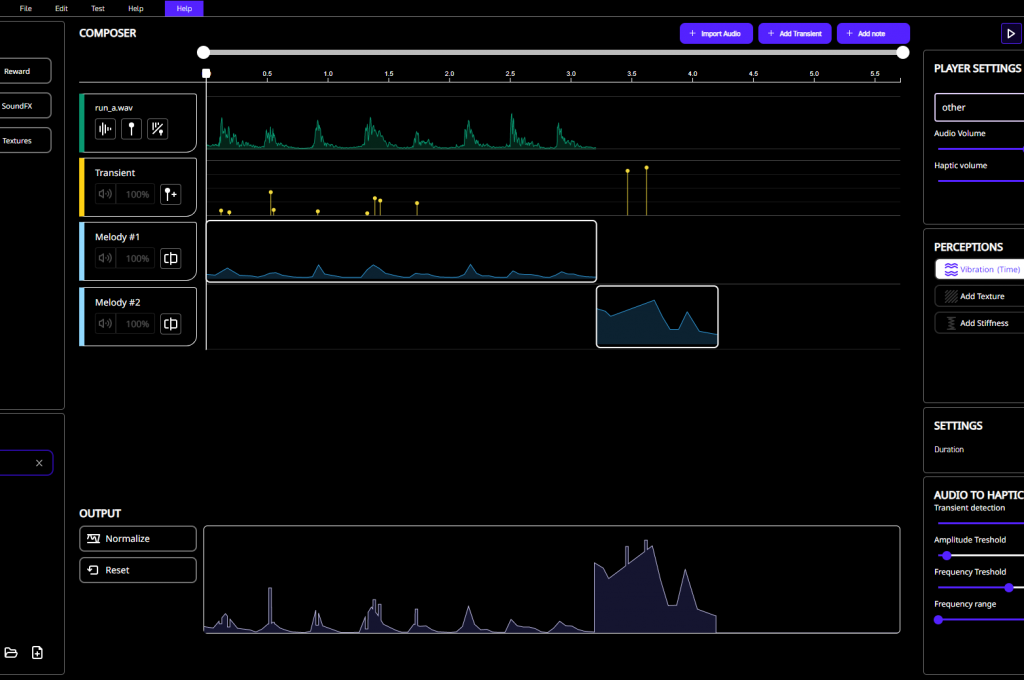

The Interhaptics Engine is a presentation engine that translates haptic effects into control or parametric signals for the supported haptic devices. It abstracts away the complexity of each device's language, which lets it extract the maximum performance from every supported platform.

The Interhaptics Engine is a real-time haptics rendering process that supports a variety of use cases (spatial haptics, event-based haptics, 3D interactions, etc.).

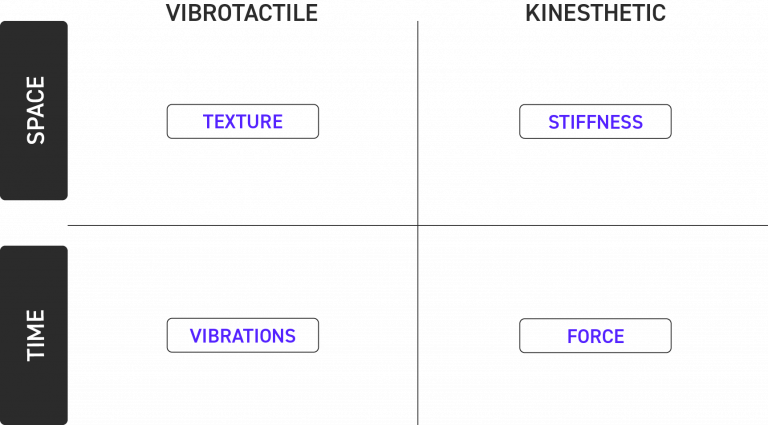

Interhaptics takes inspiration from how humans perceive touch. Humans can discern 20 to 25 distinct sensations through the sense of touch, and existing haptic devices can convincingly render just three of them: vibrations, textures, and stiffness. Each of these perceptions rests on one solid perceptual characteristic. We extracted and modeled them.

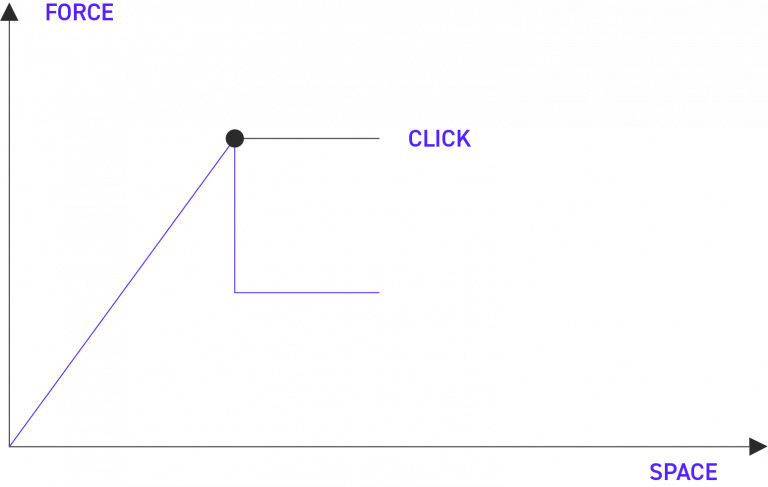

Textures and stiffness are both perceptually encoded in space (the faster you move, the faster the sensation unfolds), while vibrations and force feedback are encoded in time. Take the example of a button: the sensation it conveys is perceived as a function of the applied pressure, then a click happens and the force suddenly drops.

This behavior is intrinsic to how humans perceive touch sensations. All the algorithms and concepts included within the Interhaptics Engine are computed in real time to give users the best possible haptic experience.
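The difference between space-encoded and time-encoded sensations can be illustrated with a minimal Python sketch. It is not the Interhaptics Engine's model: the function names, the click threshold, the stiffness value, and the use of button travel as a stand-in for applied pressure are all assumptions made for illustration.

```python
import math

# All constants below are illustrative assumptions, not Interhaptics values.
CLICK_AT_MM = 2.0        # assumed travel at which the button clicks
STIFFNESS_N_PER_MM = 1.5 # assumed stiffness before and after the click
POST_CLICK_FORCE_N = 0.5 # assumed force right after the click


def button_force(travel_mm: float) -> float:
    """Stiffness encoded in space: force depends on how far the button is pressed."""
    if travel_mm <= 0.0:
        return 0.0
    if travel_mm < CLICK_AT_MM:
        # Force grows as the user presses harder.
        return STIFFNESS_N_PER_MM * travel_mm
    # At the click the force drops suddenly, then builds up again.
    return POST_CLICK_FORCE_N + STIFFNESS_N_PER_MM * (travel_mm - CLICK_AT_MM)


def texture_amplitude(position_mm: float, period_mm: float = 1.0) -> float:
    """Texture encoded in space: the output depends only on finger position."""
    return 0.5 * (1.0 + math.sin(2.0 * math.pi * position_mm / period_mm))


def vibration_amplitude(time_s: float, frequency_hz: float = 150.0) -> float:
    """Vibration encoded in time: the output depends only on elapsed time."""
    return math.sin(2.0 * math.pi * frequency_hz * time_s)
```

In this sketch, sweeping a finger faster across the texture makes `texture_amplitude` oscillate faster in time, while `vibration_amplitude` plays at the same rate no matter how the user moves.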

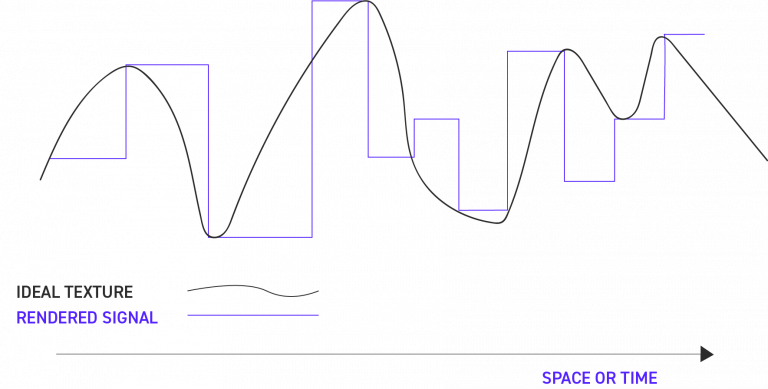

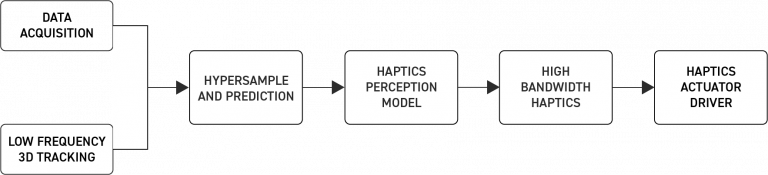

There is a well-known problem in the haptics field that comes from signal processing theory: the data acquisition rate of a system determines how accurately a signal can be processed. Position acquisition is fundamental to rendering textures and stiffness, and XR and mobile systems acquire position at rates of up to 120 Hz. Where is the catch? To work well, haptics requires rendering frequencies in the 1 kHz region. Here we encounter the well-known problem of under-sampling: you can have the best haptic actuator on the planet, but if you cannot control it fast enough, it is of little use.

(see here for a broader academic discussion of the problem and case-specific solutions)
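A short worked example makes the gap concrete. The sketch below uses the 120 Hz and 1 kHz figures from the text; the hand speed and texture period are hypothetical values chosen only to illustrate the arithmetic, and the helper names are not part of any SDK.

```python
def texture_frequency_hz(speed_mm_per_s: float, spatial_period_mm: float) -> float:
    """Temporal frequency felt when sweeping over a periodic texture."""
    return speed_mm_per_s / spatial_period_mm


def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a sampled signal can represent without aliasing."""
    return sample_rate_hz / 2.0


def is_undersampled(speed_mm_per_s: float, spatial_period_mm: float,
                    tracking_rate_hz: float) -> bool:
    """True when the texture signal exceeds what position tracking can resolve."""
    felt = texture_frequency_hz(speed_mm_per_s, spatial_period_mm)
    return felt > nyquist_hz(tracking_rate_hz)


def haptic_frames_per_position_sample(render_rate_hz: float,
                                      tracking_rate_hz: float) -> float:
    """How many haptic frames must be rendered between two position updates."""
    return render_rate_hz / tracking_rate_hz


if __name__ == "__main__":
    # Assumed scenario: a finger moving at 100 mm/s over a 1 mm grating.
    print(texture_frequency_hz(100.0, 1.0))                  # felt frequency in Hz
    print(nyquist_hz(120.0))                                 # tracking limit in Hz
    print(is_undersampled(100.0, 1.0, 120.0))
    print(haptic_frames_per_position_sample(1000.0, 120.0))
```

Under these assumptions the texture produces a 100 Hz signal, above the 60 Hz Nyquist limit of a 120 Hz tracker, and the renderer has to produce roughly eight haptic frames for every position sample it receives.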

Interhaptics solves this problem in a general manner. We included a model of human motor behavior and haptic perception within the Interhaptics Engine. The engine uses this model to hyper-sample the user's position and deliver a stable haptic perception for both spatial and time-based haptics.

This approach allows Interhaptics to create a perceptually accurate haptics rendering, far above the ability of the tracking system to acquire the position. The method works because we considered touch perception and the limits of human motor behavior within the rendering algorithm.
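The Interhaptics motor-behavior model is not described here, so the sketch below swaps in a much simpler stand-in: constant-velocity extrapolation from the last two tracked samples. It only illustrates the general idea of estimating position at render rate between slower tracking updates. The class and method names are hypothetical.

```python
class PositionPredictor:
    """Estimate position between tracking samples by linear extrapolation.

    This is a deliberately simple stand-in, not the Interhaptics model.
    """

    def __init__(self) -> None:
        self._prev = None  # (time_s, position) of the second-to-last sample
        self._last = None  # (time_s, position) of the most recent sample

    def add_sample(self, time_s: float, position: float) -> None:
        """Store a new tracked sample (for example, one every 1/120 s)."""
        self._prev, self._last = self._last, (time_s, position)

    def position_at(self, time_s: float) -> float:
        """Return the estimated position at an arbitrary render time."""
        if self._last is None:
            raise ValueError("no tracking sample received yet")
        if self._prev is None:
            return self._last[1]  # only one sample: hold it
        (t0, p0), (t1, p1) = self._prev, self._last
        if t1 == t0:
            return p1  # avoid dividing by zero on duplicate timestamps
        velocity = (p1 - p0) / (t1 - t0)
        return p1 + velocity * (time_s - t1)


if __name__ == "__main__":
    predictor = PositionPredictor()
    predictor.add_sample(0.0, 0.0)
    predictor.add_sample(1.0 / 120.0, 1.0)
    # Render at 1 kHz until the next tracking sample arrives.
    for frame in range(8):
        t = 1.0 / 120.0 + frame / 1000.0
        print(round(predictor.position_at(t), 3))
```

A constant-velocity guess degrades quickly when the hand accelerates or reverses, which is one reason a model that accounts for the limits of human motor behavior is a more robust choice than this sketch.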

Building haptics into an application has always been a problem. The assets and scripts developed for one project can rarely be carried over to a new project on a different platform.

The Interhaptics SDK addresses this problem. It lets you build haptics once, while the Interhaptics Engine manages device complexity, giving you a scalable way to create haptic assets and include them in your content.

How do you include haptics in your applications?

There are two separate ways to include haptics in applications. The first approach is a physics-based simulation of contact and forces: touch an object, and feel haptic feedback. The second approach is based on feedback triggered by events: click a button, and feel predetermined feedback for confirmation.
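The two approaches can be contrasted in a few lines of Python. This is a minimal sketch under stated assumptions: the effect table, the event name, and the spring-like contact model are hypothetical and do not reflect the Interhaptics SDK API.

```python
from typing import Dict, List

# Event-based: predetermined amplitude patterns keyed by event name.
# The pattern values are made up for illustration.
EVENT_EFFECTS: Dict[str, List[float]] = {
    "button_click": [1.0, 0.0, 0.5, 0.0],
}


def event_feedback(event_name: str) -> List[float]:
    """Return the predetermined pattern for an event, or nothing if unknown."""
    return EVENT_EFFECTS.get(event_name, [])


def contact_force(penetration_mm: float, stiffness_n_per_mm: float = 0.8) -> float:
    """Physics-based: force computed continuously from simulated contact.

    Uses a simple spring model (an assumption); no contact means no force.
    """
    return max(0.0, penetration_mm) * stiffness_n_per_mm


if __name__ == "__main__":
    print(event_feedback("button_click"))  # same pattern on every click
    for depth in (0.0, 0.5, 1.0):
        print(contact_force(depth))        # varies with how the user touches
```

The event-based path always plays the same pattern, while the physics-based path produces a different output for every contact state.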

The Interhaptics Engine was built to support every use case where haptics needs to be integrated. Whether you need haptic effects to be event-based, collision-based, interaction-based, or completely customized, the engine can digest all the necessary data to render the exact effect needed in a precise context. This flexibility allows great scalability in SDK creation and deployment. You can use the Interhaptics Engine to output a signal adapted to the detected device. Do you need a verticalized solution for a specific experience? You can build exactly what you need on top of the base engine and SDK to reach your objectives.

Together, these concepts make the Interhaptics Engine a unique piece of technology that provides a transparent interface for application developers and end users. You can scale the experience you design for your end users and reach them on 3 billion devices.
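One way to picture "a signal adapted to the detected device" is a renderer that takes a device-independent effect description and produces different output depending on device capabilities. The sketch below is an illustration only: `DeviceProfile`, its fields, and `render` are hypothetical names, and the wideband versus amplitude-only split is an assumed simplification.

```python
import math
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class DeviceProfile:
    """Hypothetical description of what a haptic device can do."""
    name: str
    update_rate_hz: int  # how often the actuator can be driven
    wideband: bool       # True: plays full waveforms; False: amplitude only


def render(envelope: Callable[[float], float], carrier_hz: float,
           duration_s: float, device: DeviceProfile) -> List[float]:
    """Turn one device-independent effect into samples for a given device."""
    sample_count = round(duration_s * device.update_rate_hz)
    samples = []
    for i in range(sample_count):
        t = i / device.update_rate_hz
        level = max(0.0, min(1.0, envelope(t)))  # clamp intensity to [0, 1]
        if device.wideband:
            # Wideband actuator: send the modulated waveform itself.
            samples.append(level * math.sin(2.0 * math.pi * carrier_hz * t))
        else:
            # Simple rumble motor: send only the intensity envelope.
            samples.append(level)
    return samples


if __name__ == "__main__":
    fade_out = lambda t: 1.0 - t / 0.1  # 100 ms linear fade
    hd = DeviceProfile("hd_actuator", update_rate_hz=1000, wideband=True)
    rumble = DeviceProfile("rumble_motor", update_rate_hz=100, wideband=False)
    print(len(render(fade_out, 150.0, 0.1, hd)))
    print(len(render(fade_out, 150.0, 0.1, rumble)))
```

The effect is authored once as an envelope and a carrier frequency. Only the final rendering step knows about the device, which is the property that makes assets portable across platforms.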
