Interhaptics is collaborating with Simon Frübis of Hochschule Magdeburg-Stendal on multisensory haptic feedback for VR/AR/MR. The study also examines how to integrate sound into haptics using the Interhaptics Haptic Composer.

Haptics for VR/AR/MR: the Importance of Multisensory Feedback in Spatial Computing

Improving immersion is one of the key challenges for haptics in extended reality. In a multisensory VR experience, a virtual body can be perceived as one's own, a phenomenon called the “Sense of Embodiment” (SoE). With appropriate visual and tactile stimulation, this effect can be used to manipulate the sense of body ownership. The “rubber hand illusion” and the “virtual hand illusion” are well-known experiments that have demonstrated it.

Embodiment is a concept from cognitive psychology: it describes how our thoughts, feelings, behaviors, and perceptions are shaped by our sensory experiences and our body positions.

Many tools exist to achieve this illusion, such as VR gloves, bracelets, exoskeletons, and suits. These devices were built to bridge the gap between reality and virtuality. However, their adoption in the consumer market has been difficult for various reasons (size, price, etc.). As a result, user experience (UX) and user interface (UI) design for extended reality applications with multisensory haptic feedback remains underexplored.

The collaboration with Simon Frübis will therefore cover integrating sound into haptics for VR/AR/MR with Interhaptics, as well as designing haptic feedback for suitable haptic devices.

Simon Frübis is an interaction design student at the University of Applied Sciences Magdeburg-Stendal. In his thesis, he investigates multisensory feedback for AR and VR. By creating virtual wearables that combine haptic and audio feedback in extended reality, Simon evaluates the effects of multisensory feedback on the user experience (UX).

“I see strong potential in the combination of AR with our most natural input device: our hands. Multisensory feedback allows us to interact quickly and precisely, enabling more immersive hand interactions. We still need to explore working solutions by creating a new design pattern language. Interhaptics offers a great platform and foundation to design such patterns and to identify best practices.” Simon Frübis, passionate about interaction design, immersive technologies, and spatial and physical computing.

Interhaptics is a software company specializing in haptics for VR/AR/MR. It provides development and deployment tools for hand interactions and haptic feedback in extended reality and mobile applications. Interhaptics' mission is to enable the growth of a scalable haptics ecosystem. The company strives to deliver top-notch development tools for the XR, mobile, and console developer community, and to ensure the interoperability of haptics-enabled content across any haptics-enabled platform.

The first prototype shows how Simon integrated low-budget haptic devices into VR

With the help of Interhaptics' Haptic Composer, Simon will be able to design custom haptics for extended reality, in particular for sound-driven actuators. Such a device extracts the low frequencies from an audio signal and transforms them into haptic feedback. Once placed on certain body parts, it can convince the brain that it is exposed to high acoustic energy through the auto-completion principle. It works the same way as looking at a picture of a sunny beach: you can almost smell the sunscreen and hear the waves crashing. This is because our brain automatically fills in the missing aspects of the experience by itself.
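To make the idea of separating low frequencies from audio more concrete, here is a minimal Python sketch, assuming a simple first-order (RC-style) low-pass filter and an illustrative 100 Hz cutoff. It is not the Haptic Composer's processing or any specific device's firmware: the helper names `low_pass` and `rms`, the sample rate, and the cutoff are hypothetical choices for illustration, and real sound-to-haptics hardware may use steeper filters or analog crossovers.

```python
import math


def low_pass(samples, sample_rate, cutoff_hz):
    """First-order IIR low-pass filter (hypothetical helper).

    Keeps content below roughly cutoff_hz, the band a sound-driven
    actuator would turn into vibration, and attenuates higher frequencies.
    """
    dt = 1.0 / sample_rate
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # equivalent RC time constant
    alpha = dt / (rc + dt)                   # smoothing factor in (0, 1)
    out = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)  # move the output a fraction of the way toward the input
        out.append(y)
    return out


def rms(signal):
    """Root-mean-square level, used to compare signal energy."""
    return math.sqrt(sum(s * s for s in signal) / len(signal))


if __name__ == "__main__":
    sr = 8000  # assumed sample rate in Hz
    t = [n / sr for n in range(sr)]  # one second of sample times
    bass = [math.sin(2 * math.pi * 40 * x) for x in t]      # 40 Hz tone
    treble = [math.sin(2 * math.pi * 2000 * x) for x in t]  # 2 kHz tone
    for name, sig in (("40 Hz", bass), ("2 kHz", treble)):
        kept = rms(low_pass(sig, sr, cutoff_hz=100.0)) / rms(sig)
        print(f"{name}: {kept:.2f} of the level reaches the actuator")
```

With these assumed settings, most of the 40 Hz tone passes through while the 2 kHz tone is strongly attenuated. A higher-order filter (for example a Butterworth design) would separate the two bands more sharply, at the cost of more computation.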

“Sound-to-haptics devices are the easiest entry point for OEM manufacturers in the haptics market. They are a powerful and expressive category, and we are looking forward to exploring, with Simon, their ability to enhance VR experiences.”

Eric Vezzoli — Interhaptics CEO

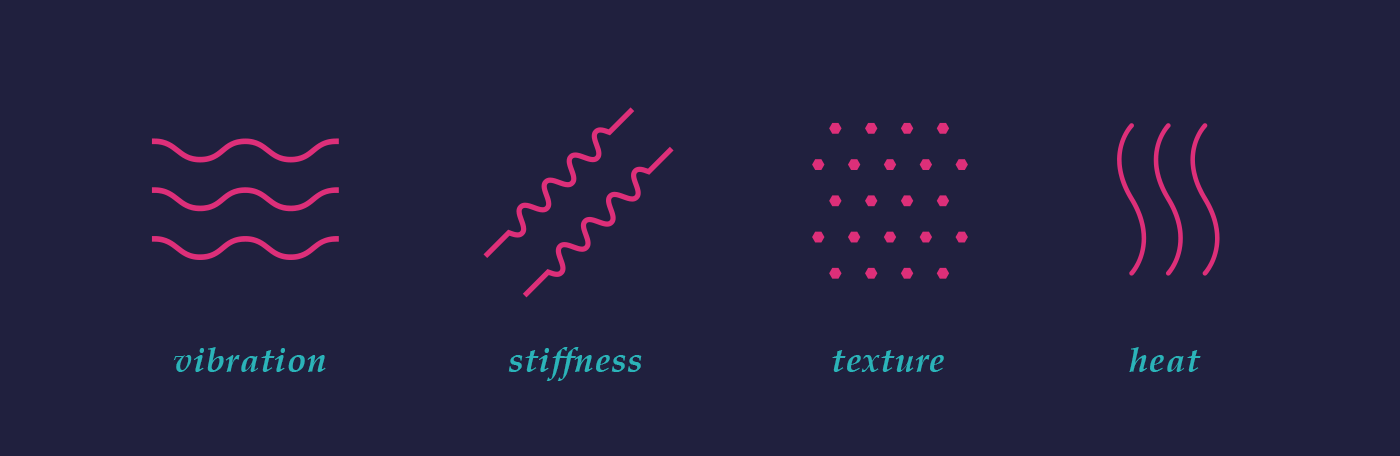

Today, to create haptics for VR/AR/MR with Interhaptics, you create an asset (a haptic material) in the Haptic Composer. This asset stores the haptic representation across four perceptions: vibration, texture, stiffness, and thermal conductivity.

The collaboration with Simon will explore how sound can be integrated into this haptics workflow for extended reality.

You can try haptics for VR/AR/MR in our demo, available on Oculus Quest. If you want to add haptics for extended reality to your projects, join the more than 900 students who have taken our Udemy course and start designing with Interhaptics!

If you have any questions, join our haptic designer community on Discord.