Haptics has been experiencing a second renaissance over the last year, with several markets adopting haptic feedback technologies to solve different human-interaction challenges. Today, we see haptic feedback design applied in automotive, gaming, IoT, virtual and augmented reality, wearables, smartphones, and other related markets.

These markets are sustained by the wide availability of wide-bandwidth haptic actuators. With the upcoming arrival of promising full-bandwidth technologies such as piezoelectric, ultrasonic, and electroadhesion haptics, OEMs and device manufacturers are planning more ambitious haptics roadmaps and implementations.

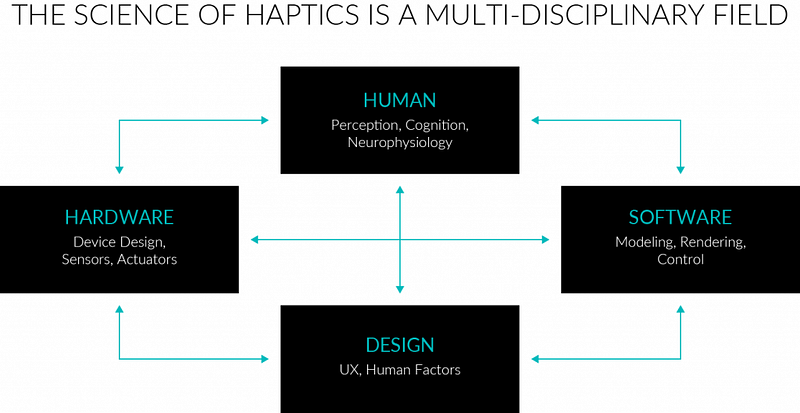

Often, this promising opportunity runs into the practical challenge of implementing haptic feedback within products. A satisfying haptic experience depends on several key elements, among them human cognition, user experience design, hardware, and firmware implementation.

One of the key points to consider when designing a product with haptic feedback is the haptics technology stack. Here I share a brief recap of a key blog post by Chris Ulrich, CTO of Immersion Corporation.

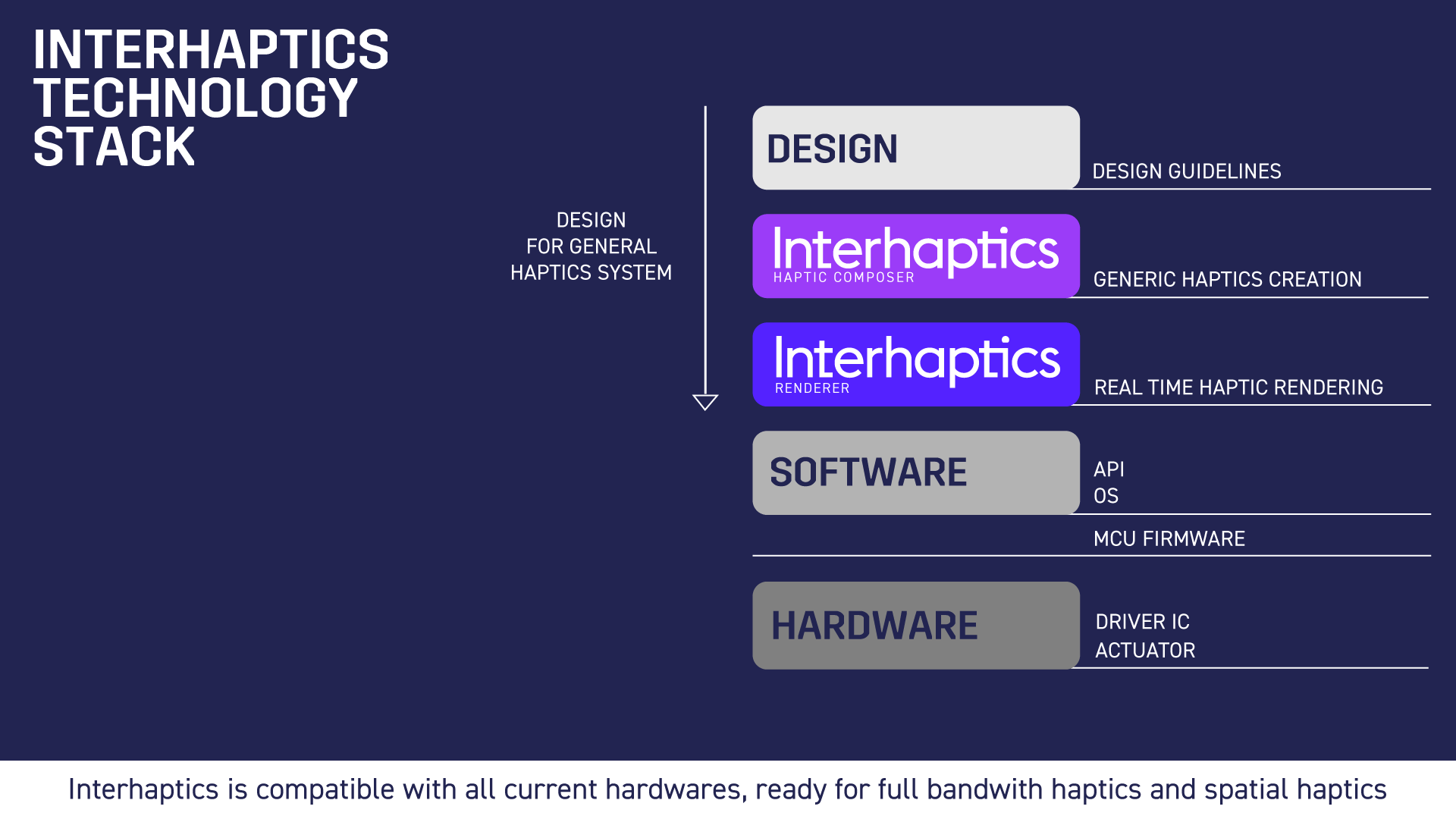

A high-level analysis of the current haptics stack can be visualized in the following slide.

The stack is characterized by vertically integrated software and design processes, each tied to its underlying APIs and codecs. This was sufficient in an era when haptic feedback was limited to mobile phones and a few other applications, and when ERM (eccentric rotating mass) actuators offered only limited expressiveness.

Rich haptic feedback experiences were reserved for researchers and R&D departments.

With wide-bandwidth haptic actuators now integrated into many different platforms, we are seeing strong fragmentation of the software implementations within the haptics stack. To name a few:

Apple: Core Haptics (mid-2019)

Google: Android Vibrator API (various iterations)

Khronos: OpenXR 1.0 (Oculus Quest is doing its own thing)

Every other haptic feedback device manufacturer has its own proprietary SDKs and APIs that add to the fragmentation of the ecosystem. Count among them each of the 10 different haptic exoskeletons for XR, the PS5, the Nintendo Switch, and many other devices, from university labs up to the best-funded haptics startups.

The key point about these APIs is that they all fulfill the same role: they provide the functionality to implement a haptic feedback experience in a digital medium. What they actually produce is a highly fragmented ecosystem, in which codecs, creation tools, and design guidelines are scattered across platforms.

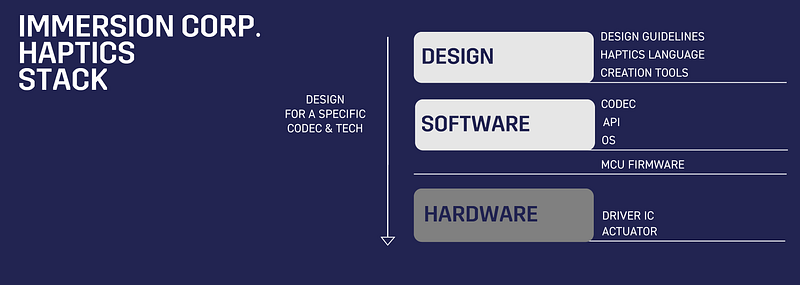

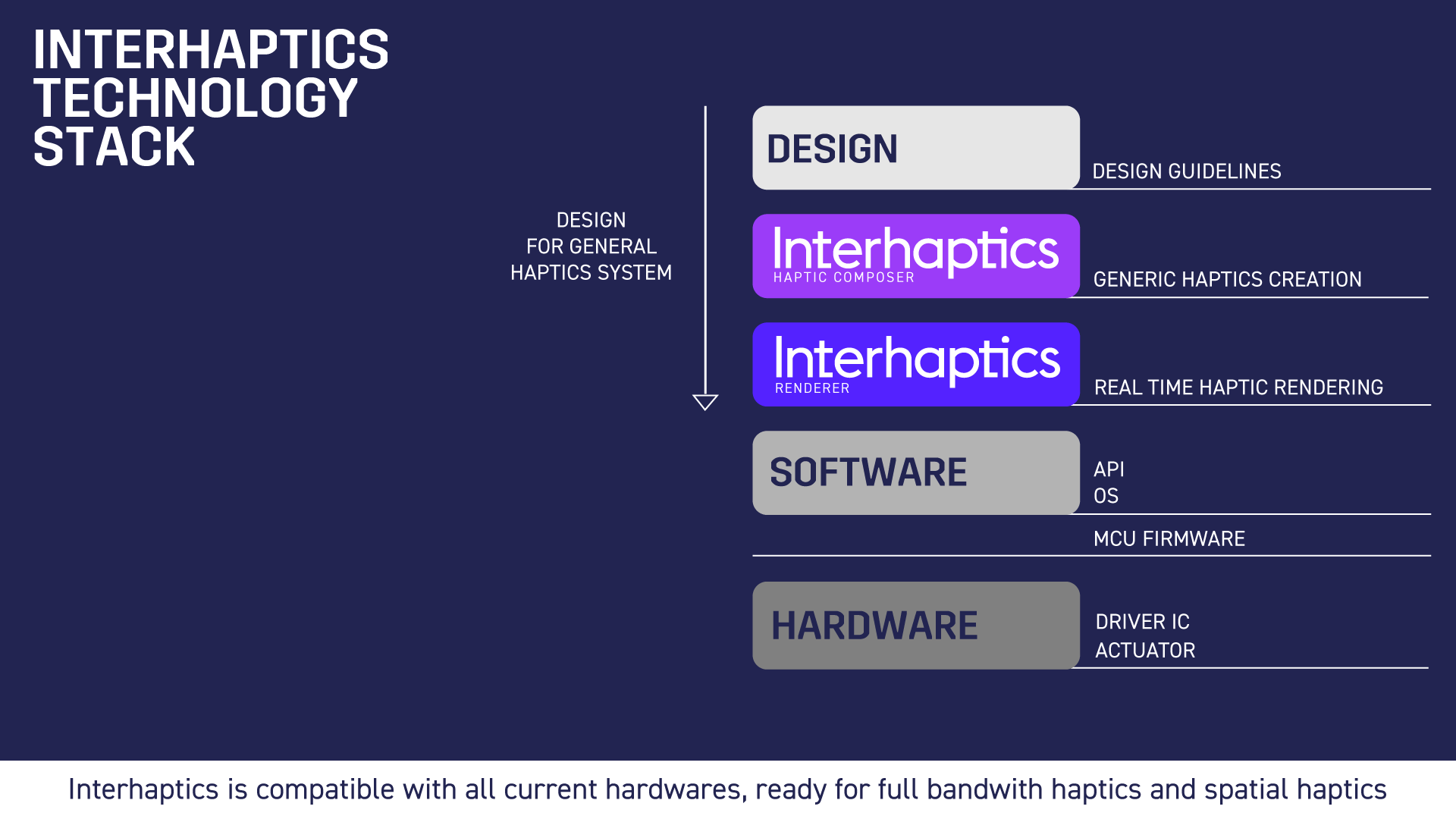

This was the starting point for Interhaptics, and it is the problem Interhaptics was created to solve for haptic feedback design.

Interhaptics replaces the platform-specific haptics languages, codecs, and vertically integrated design tools with a single haptics platform.

We reach this milestone with dedicated haptics rendering software that synthesizes the haptic feedback while the digital application is running. Want the feeling of explosions in a mobile game? No problem!

Nothing is pre-compiled or stored in libraries; everything happens in real time. Have an exoskeleton with 30 actuators split among force feedback, LRAs, and Peltier cells? Again, no problem.
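As a rough illustration of what "synthesized at run time rather than stored" can mean, here is a minimal Python sketch that generates each application frame of an effect from parameters instead of loading a pre-recorded file. This is not the Interhaptics renderer: the function name `explosion_frame`, the 6 kHz output rate, the 60 fps frame rate, and the decaying-sine model of an explosion are all hypothetical assumptions chosen for illustration.

```python
import math

SAMPLE_RATE = 6000  # hypothetical haptic output rate, samples per second
FRAME_RATE = 60     # hypothetical application frame rate
SAMPLES_PER_FRAME = SAMPLE_RATE // FRAME_RATE  # 100 samples per frame


def explosion_frame(frame_index, intensity, freq_hz=80.0, decay_s=0.25):
    """Synthesize one application frame of a hypothetical explosion effect.

    Assumed model: a sine carrier whose amplitude decays exponentially from
    the moment the explosion starts (frame 0). `intensity` can be driven by
    live application state (e.g. distance to the blast) and is clamped to
    [0, 1], so every returned sample lies in [-1.0, 1.0].
    """
    intensity = max(0.0, min(1.0, intensity))
    start = frame_index * SAMPLES_PER_FRAME  # absolute index of first sample
    samples = []
    for i in range(SAMPLES_PER_FRAME):
        t = (start + i) / SAMPLE_RATE  # seconds since the explosion began
        envelope = intensity * math.exp(-t / decay_s)
        samples.append(envelope * math.sin(2 * math.pi * freq_hz * t))
    return samples


# Game-loop sketch: each frame's samples are computed on demand and would be
# handed to a device-specific output stage (not shown).
for frame_index in range(3):
    frame = explosion_frame(frame_index, intensity=0.8)
```

Because each frame is computed from parameters, changing the intensity, frequency, or decay mid-effect costs nothing extra, which is the practical advantage of synthesis over fixed effect libraries.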

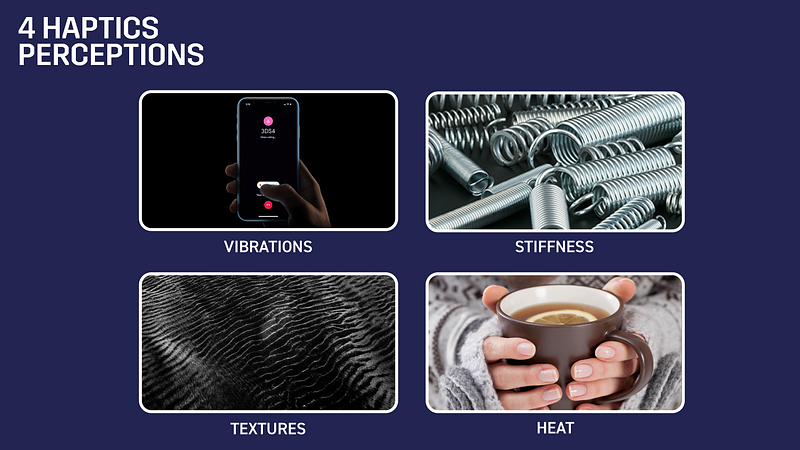

Interhaptics runs four perceptual haptic rendering processes in parallel: force feedback, spatial texture rendering, vibration pattern rendering, and heat transfer control.

With Interhaptics, the haptics technology stack becomes much more similar to audio or video technology stacks, where editing and rendering software is generic and independent of the underlying hardware. In audio and video, rendering is based on human perception. We did the same for haptics.

This cornerstone technology allowed us to achieve three goals:

1. Interhaptics is independent of specific haptics APIs.

The same haptics software runs in iOS, Android, or XR applications. You get a 0dB, real-time rendered, full-bandwidth haptic signal ready to be sent to your devices. Even better, the haptic signal is predicted and refreshed for the upcoming application frames, so there is zero latency. The only signal management you have to implement is the logic that adapts this signal to the constructs of each specific API, and it is always easier to filter a full-bandwidth signal down than to build a rich one up.
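To make the filtering argument concrete, here is a minimal Python sketch (not Interhaptics code) of one way to reduce a full-bandwidth signal for an actuator API that only accepts amplitude over time. The function name `to_amplitude_segments`, the 10 ms segment length, the 0 to 255 level range, and the use of RMS as the amplitude measure are assumptions chosen for illustration.

```python
import math


def to_amplitude_segments(samples, sample_rate, segment_ms=10, levels=255):
    """Reduce a full-bandwidth haptic signal to (duration_ms, level) pairs.

    Assumes samples are normalized to [-1.0, 1.0]. Each segment's level is
    the RMS of its samples scaled to 0..levels; the sqrt(2) factor maps a
    full-scale sine (RMS = 1/sqrt(2)) to the maximum level. Frequency
    content is discarded, which is exactly what an amplitude-only API needs.
    """
    seg_len = max(1, int(sample_rate * segment_ms / 1000))
    segments = []
    for start in range(0, len(samples), seg_len):
        chunk = samples[start:start + seg_len]
        rms = math.sqrt(sum(x * x for x in chunk) / len(chunk))
        level = round(min(1.0, rms * math.sqrt(2)) * levels)
        duration_ms = round(len(chunk) * 1000 / sample_rate)
        segments.append((duration_ms, level))
    return segments


# Example: 100 ms of a full-scale 200 Hz sine sampled at 8 kHz.
signal = [math.sin(2 * math.pi * 200 * n / 8000) for n in range(800)]
segments = to_amplitude_segments(signal, 8000)
timings = [d for d, _ in segments]
amplitudes = [a for _, a in segments]
```

On Android, for instance, the `timings` and `amplitudes` lists map naturally onto the arrays taken by `VibrationEffect.createWaveform(long[] timings, int[] amplitudes, int repeat)`, whose amplitudes range from 0 to 255. For an on/off-only actuator, thresholding each level would be the coarser equivalent. Going the other way, recovering lost frequency content from such a reduced representation, is not possible, which is why starting from the full-bandwidth signal is the easier direction.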

2. The Haptics Composer can create haptic experiences for any haptic device.

Even the latest piezoelectric actuator can be used to its full capability.

We have a community of creators using the Haptics Composer, along with extensive documentation and learning resources. These cover, and will continue to cover, design guidelines for the different haptic feedback applications on the market. Want a dev kit for a new haptic feedback technology? Get it integrated with Interhaptics and ship it to your customers.

3. Haptics software can be deployed at scale.

The Interhaptics software architecture is modular and designed to be deployed at scale. You can deliver a great haptic experience in the lab or on millions of devices in customers' hands.

The time is ripe for the haptics industry to mature and to enable designers and creators to leverage the wonderful communication potential of haptic technology. Interhaptics wants to play a major role in this process by enabling OEMs and haptic feedback technology manufacturers to implement great haptics, cut costs, and reach a larger community. We are doing it right now: check out our work with SenseGlove. We are also doing it in the Haptics Industry Forum association, where we are working to define haptics standards and are leading the haptic feedback design section.

Want to do haptics? Get in contact.