Interhaptics is a software company specializing in haptics. It provides development and deployment tools for hand interactions and haptic feedback in extended reality, mobile, and console applications.

This November, we are introducing Interhaptics' newest hand-tracking feature for extended reality in the Interaction Builder: snapping. What difference does it make? You will be able to create more realistic and accurate hand-tracking interactions for VR/AR/MR. Adding a haptic layer to these interactions can further increase the immersion level of your solution.

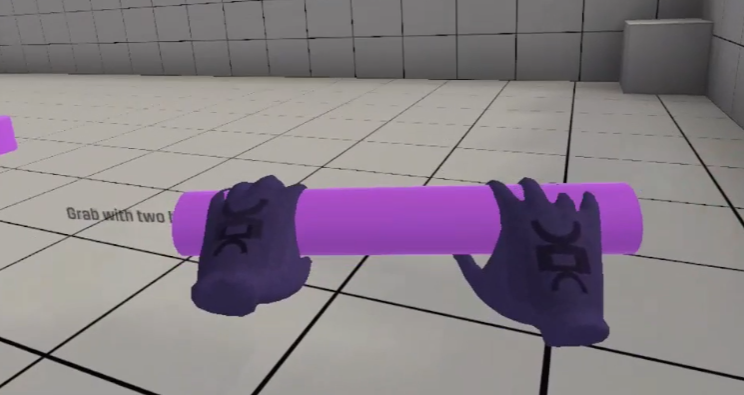

The Interaction Builder already includes a wide range of interaction primitives that you can use to enhance your hand tracking for extended reality. Among them, you can find different hand manipulations like grabbing, moving, rotating, or sliding objects. Today we are expanding the interactions portfolio with a new hand-tracking feature for VR/AR/MR: snapping. Adding it to the library improves the quality of the haptic experience you can build.

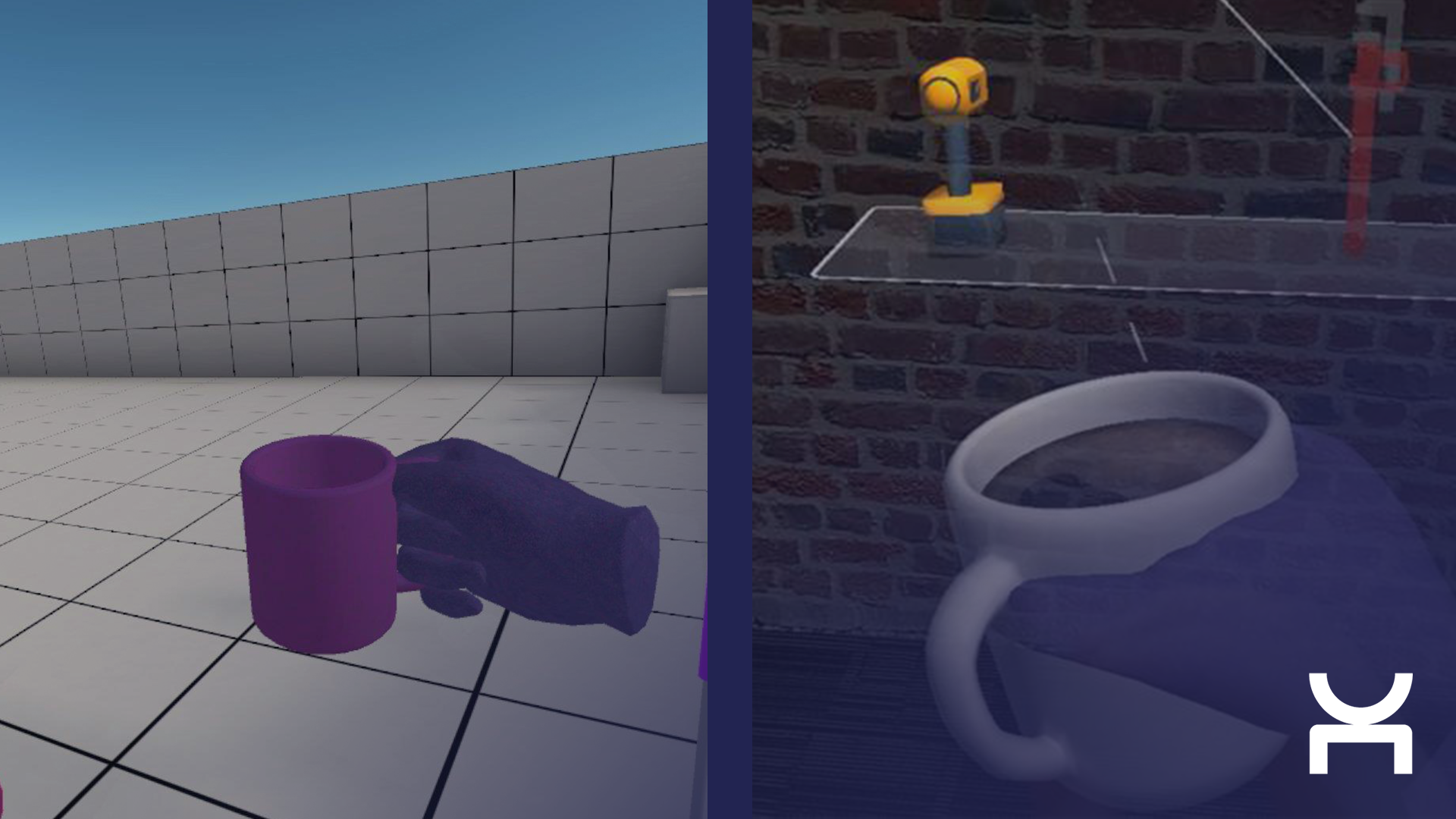

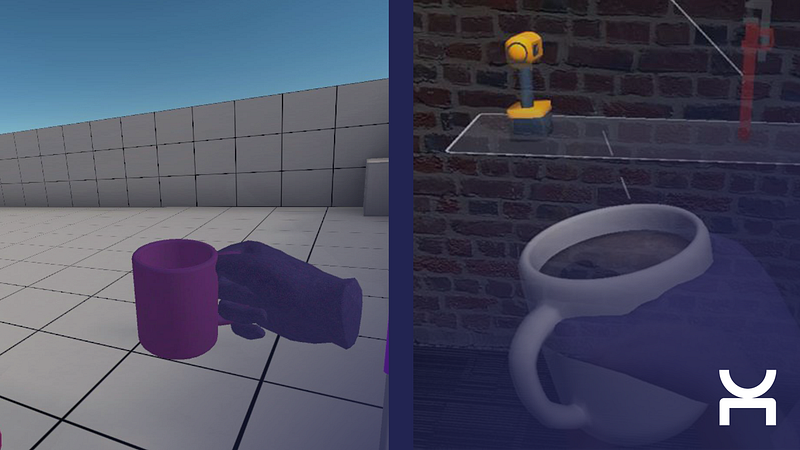

The newly introduced Snapping feature in the Interaction Builder allows the customization of the hand pose or gesture in the interaction. The user can precisely place each finger and the whole hand around the shape of virtual objects as one does in real life.
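To make the idea concrete, here is a minimal sketch of pose snapping in Python. It is a generic illustration, not the Interhaptics API: the `SnapPose` type, the per-finger "curl" representation, and the `snap_radius` parameter are all assumptions introduced for this example. The sketch blends the tracked finger curls toward a pose authored for the object, with the blend getting stronger as the hand approaches.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class SnapPose:
    """Hypothetical authored pose for one object (not an Interhaptics type)."""
    # Target curl per finger: 0.0 is fully open, 1.0 is fully curled.
    finger_curl: Dict[str, float]
    # Distance (in metres) within which the hand starts snapping to the pose.
    snap_radius: float


def snap_weight(distance: float, radius: float) -> float:
    """Return a blend weight in [0, 1]: 1 at the object, 0 at or beyond the radius."""
    if radius <= 0.0:
        return 0.0
    return max(0.0, min(1.0, 1.0 - distance / radius))


def apply_snapping(tracked: Dict[str, float], pose: SnapPose, distance: float) -> Dict[str, float]:
    """Linearly blend tracked finger curls toward the authored pose."""
    w = snap_weight(distance, pose.snap_radius)
    return {
        # Fingers missing from the authored pose keep their tracked value.
        finger: (1.0 - w) * curl + w * pose.finger_curl.get(finger, curl)
        for finger, curl in tracked.items()
    }


if __name__ == "__main__":
    lever_grip = SnapPose(finger_curl={"thumb": 0.6, "index": 0.8}, snap_radius=0.10)
    tracked_hand = {"thumb": 0.0, "index": 0.2}
    print(apply_snapping(tracked_hand, lever_grip, distance=0.05))
```

A linear blend is only one possible choice here; a real implementation would likely work on full joint rotations and might use a different falloff curve.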

What problem does it address in hand tracking for VR/AR/MR?

With interactions in XR, and especially with hand tracking for extended reality, the objective is to make it “feel” right. To achieve that, the virtual world you are interacting with should be as close to the real world as possible in what you see, hear, and touch. For touch, this means adding haptic feedback to enhance realism and immersion. Visually, it means matching the user's real-life body movement in the virtual experience. Users need to experience what they naturally expect in order to believe it, which makes the whole experience more realistic.

Let’s take the use case of a training session where the user is learning how to use specific equipment, like a dashboard. In this context, you will see your hand curling around the steering wheel, just as it does in real life. Interactions feel much more natural and behave the way the user expects them to. This sort of hand tracking for VR/AR/MR therefore increases the end user's immersion.

Snapping: the latest hand-tracking feature for extended reality

Interhaptics' latest hand-tracking feature for extended reality, snapping, fits any XR experience that requires hand manipulations. For example, in VR training, sports simulation, gaming, or even showrooms, the way the hand is placed to grip a lever will be reproduced almost as in reality. The same applies when the fingers grab a handle to carry out an action.

Like our previous features, snapping will be available cross-platform on all Interhaptics-supported tracking devices, including Oculus Quest, Ultraleap, and HoloLens 2. It will also support the latest versions of the Unity 3D engine (Unity 2020+). All of this will be included in the Interhaptics suite.

We cannot wait to see all the amazing hand-tracking experiences for VR/AR/MR that you create with Interhaptics. If you would like to collaborate with Interhaptics and integrate our hand tracking for extended reality or haptic devices, you can contact us directly here. To stay tuned for all our new and exciting announcements, follow us and make sure to subscribe to our monthly newsletter.